Managing authoritative and recursive name servers (i.e. DNS servers) at Hopbox has shown us a bunch of quirks of, and insights into, the domain name system.

Here's a list of them in no particular order:

Trace full name recursion

The +trace option of dig performs the full recursion itself and shows each stage of name server delegation, from the root name servers to the TLD name servers to the domain's authoritative name servers:

# dig +trace hopbox.net

;; communications error to 9.9.9.9#53: timed out

; <<>> DiG 9.18.28-1~deb12u2-Debian <<>> +trace hopbox.net

;; global options: +cmd

. 7256 IN NS f.root-servers.net.

. 7256 IN NS g.root-servers.net.

. 7256 IN NS h.root-servers.net.

. 7256 IN NS i.root-servers.net.

. 7256 IN NS j.root-servers.net.

. 7256 IN NS k.root-servers.net.

. 7256 IN NS l.root-servers.net.

. 7256 IN NS m.root-servers.net.

. 7256 IN NS a.root-servers.net.

. 7256 IN NS b.root-servers.net.

. 7256 IN NS c.root-servers.net.

. 7256 IN NS d.root-servers.net.

. 7256 IN NS e.root-servers.net.

. 7256 IN RRSIG NS 8 0 518400 20250114170000 20250101160000 26470 . Dpc2m6PNj2gdfiO0RGvDAONDSPlPpfc+BYy947k/Nr4bPDiWTgNAxjAT bpU9nNUmnp91Catsz2hW6eSInVeboF3Ngu2YJSyOsp7J18ZWJ1HlRZmi d7kIY0/qqLzKv8FELtN9utLqr3JnT7asGe1AaJiqmsVaKaCjd6bEpE1v W/ouA91IFeSmFfPOBCBtSm7cRlbrwPQ5Nk+KZ+xbwJiJLg4hfHz+GGqZ bev18kbHG+TKL9lgD/RFc0QjMDA6sEvX2KEsU4YSnHOvDTK4/YxDKBs2 OZN+HWQKUaU5jlfAYUbiMlHH6fDhcqFSnv47kfirOZ/EegRsE4JdfQ2g xD0vVA==

;; Received 525 bytes from 9.9.9.9#53(9.9.9.9) in 288 ms

net. 172800 IN NS e.gtld-servers.net.

net. 172800 IN NS a.gtld-servers.net.

net. 172800 IN NS i.gtld-servers.net.

net. 172800 IN NS d.gtld-servers.net.

net. 172800 IN NS j.gtld-servers.net.

net. 172800 IN NS f.gtld-servers.net.

net. 172800 IN NS b.gtld-servers.net.

net. 172800 IN NS k.gtld-servers.net.

net. 172800 IN NS c.gtld-servers.net.

net. 172800 IN NS g.gtld-servers.net.

net. 172800 IN NS h.gtld-servers.net.

net. 172800 IN NS l.gtld-servers.net.

net. 172800 IN NS m.gtld-servers.net.

net. 86400 IN DS 37331 13 2 2F0BEC2D6F79DFBD1D08FD21A3AF92D0E39A4B9EF1E3F4111FFF2824 90DA453B

net. 86400 IN RRSIG DS 8 1 86400 20250115050000 20250102040000 26470 . N893DyeJ8VpUSOFqR8D5md0pYbjuu5osyYCSFU/rbJcD14e8nbLLgbP0 P23kQu5t75HknIBgujBPYCPu5gvoNJqal9k2TbbAfCaJYQL1Nyn7d+7X 7SW8rQnBZgNGgJ0OeBStF1MIcVmxmro581AmAt8vi8Zt/qhuR7j57djz 47dt2EYHcPWArM+MoiepAZF3sCmAOToNt/AXC7RphpPxKsw+csvnsGQB ln/Cs8exOT6ZzTFPCnJKA8MUIZAcUf7A3gmH2sbBrSZWD53S9ix3ALmz NFnve/Es+dv3dt6Gh5ezq1ENtlzicUKBkuAyAfCjQb3hCDR6rvBXttnK GIsBYg==

;; Received 1167 bytes from 2001:500:2f::f#53(f.root-servers.net) in 76 ms

hopbox.net. 172800 IN NS ns-146-a.gandi.net.

hopbox.net. 172800 IN NS ns-17-b.gandi.net.

hopbox.net. 172800 IN NS ns-241-c.gandi.net.

A1RT98BS5QGC9NFI51S9HCI47ULJG6JH.net. 900 IN NSEC3 1 1 0 - A1RTLNPGULOGN7B9A62SHJE1U3TTP8DR NS SOA RRSIG DNSKEY NSEC3PARAM

A1RT98BS5QGC9NFI51S9HCI47ULJG6JH.net. 900 IN RRSIG NSEC3 13 2 900 20250107030726 20241231015726 31059 net. GwLCxxuQ4CZwSQDd0s2kdMTqPFovriFLpLPpn68iueesfX5aRciPVNju xdQ/yDfj2hRAjG50vhQILBQQ2m/86g==

EGMA2VHP2FPMA6D3EIFRUKQ94J3FTVCB.net. 900 IN NSEC3 1 1 0 - EGMBDPOV3HTH4B18NND99572B5V8VU8H NS DS RRSIG

EGMA2VHP2FPMA6D3EIFRUKQ94J3FTVCB.net. 900 IN RRSIG NSEC3 13 2 900 20250106030031 20241230015031 31059 net. lO4ZFHTfS3MFScIk27f5rZm0rVX7BHspNek365M91J7hTCNr3wS62QQw 9yyT1OeXpOmDSwyCU+Jb+K+yIvvtpA==

;; Received 602 bytes from 192.31.80.30#53(d.gtld-servers.net) in 164 ms

hopbox.net. 10800 IN A 101.53.132.190

;; Received 55 bytes from 213.167.230.18#53(ns-17-b.gandi.net) in 24 ms

Notice the ;; Received ... line at the end of each step: it tells which server answered that step, and the records listed just above it are that server's response.

Let's go through each step of the response:

At the first level:

;; Received 525 bytes from 9.9.9.9#53(9.9.9.9) in 288 ms

My recursive resolver, 9.9.9.9, responded with the list of all root name servers.

At the second level:

;; Received 1167 bytes from 2001:500:2f::f#53(f.root-servers.net) in 76 ms

f.root-servers.net responded with all the .net TLD name servers.

At the third level:

;; Received 602 bytes from 192.31.80.30#53(d.gtld-servers.net) in 164 ms

d.gtld-servers.net responded with the list of name servers for hopbox.net.

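At the fourth level:

;; Received 55 bytes from 213.167.230.18#53(ns-17-b.gandi.net) in 24 ms

ns-17-b.gandi.net, one of the authoritative name servers for hopbox.net, responded with the A record for hopbox.net.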
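
To make the walkthrough above repeatable, here is a minimal Python sketch that pulls the ;; Received ... lines out of saved dig +trace output and lists which server answered each step. The names RECEIVED_RE, trace_steps and SAMPLE are our own for illustration, and the regular expression only assumes the line format seen in the output above.

```python
import re

# Matches dig's per-step footer, for example:
# ";; Received 525 bytes from 9.9.9.9#53(9.9.9.9) in 288 ms"
RECEIVED_RE = re.compile(
    r"^;; Received (\d+) bytes from (\S+)#(\d+)\((\S+)\) in (\d+) ms$"
)


def trace_steps(dig_output: str) -> list[dict]:
    """Return one entry per delegation step found in `dig +trace` output."""
    steps = []
    for line in dig_output.splitlines():
        match = RECEIVED_RE.match(line.strip())
        if match:
            size, address, _port, server, millis = match.groups()
            steps.append({
                "server": server,
                "address": address,
                "bytes": int(size),
                "ms": int(millis),
            })
    return steps


# The four footer lines from the trace of hopbox.net shown above.
SAMPLE = """\
;; Received 525 bytes from 9.9.9.9#53(9.9.9.9) in 288 ms
;; Received 1167 bytes from 2001:500:2f::f#53(f.root-servers.net) in 76 ms
;; Received 602 bytes from 192.31.80.30#53(d.gtld-servers.net) in 164 ms
;; Received 55 bytes from 213.167.230.18#53(ns-17-b.gandi.net) in 24 ms
"""

for number, step in enumerate(trace_steps(SAMPLE), start=1):
    print(number, step["server"], step["address"], f'{step["ms"]} ms')
```

Summing the ms values gives a rough feel for the cost of a completely cold lookup (552 ms for this trace), though a real resolver would normally have the root and TLD answers cached.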

Long CNAME chain insanity

ecs.office.com is a Microsoft Office domain which seems to sit behind 4 levels of CNAMEs:

# dig ecs.office.com

; <<>> DiG 9.18.28-1~deb12u2-Debian <<>> ecs.office.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 41779

;; flags: qr rd ra; QUERY: 1, ANSWER: 5, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 1232

;; QUESTION SECTION:

;ecs.office.com. IN A

;; ANSWER SECTION:

ecs.office.com. 143 IN CNAME ecs.office.trafficmanager.net.

ecs.office.trafficmanager.net. 19 IN CNAME s-0005-office.config.skype.com.

s-0005-office.config.skype.com. 6644 IN CNAME ecs-office.s-0005.s-msedge.net.

ecs-office.s-0005.s-msedge.net. 214 IN CNAME s-0005.s-msedge.net.

s-0005.s-msedge.net. 214 IN A 52.113.194.132

;; Query time: 284 msec

;; SERVER: 9.9.9.9#53(9.9.9.9) (UDP)

;; WHEN: Thu Jan 02 11:16:02 IST 2025

;; MSG SIZE rcvd: 198

Every extra CNAME that is not already cached can add to name resolution latency, but they presumably have their reasons.

The same behavior is observed for IPv6/AAAA records as well.
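
Counting the hops by eye gets tedious for longer chains. A small Python sketch, assuming answer lines in the five-column layout dig prints (owner, TTL, class, type, data), can follow the chain for us. The function name follow_cnames and the ANSWER constant are hypothetical helpers, not part of any DNS library.

```python
def follow_cnames(answer_lines, qname):
    """Walk CNAME records starting at qname; return (chain, addresses)."""
    cnames = {}
    addresses = {}
    for line in answer_lines:
        fields = line.split()
        if len(fields) != 5:
            continue  # skip comments, blanks and records with spaced rdata
        owner, _ttl, _cls, rtype, rdata = fields
        if rtype == "CNAME":
            cnames[owner] = rdata
        elif rtype in ("A", "AAAA"):
            addresses.setdefault(owner, []).append(rdata)

    chain = [qname]
    while chain[-1] in cnames:
        target = cnames[chain[-1]]
        if target in chain:  # guard against CNAME loops
            break
        chain.append(target)
    return chain, addresses.get(chain[-1], [])


# ANSWER SECTION from the ecs.office.com query above.
ANSWER = [
    "ecs.office.com. 143 IN CNAME ecs.office.trafficmanager.net.",
    "ecs.office.trafficmanager.net. 19 IN CNAME s-0005-office.config.skype.com.",
    "s-0005-office.config.skype.com. 6644 IN CNAME ecs-office.s-0005.s-msedge.net.",
    "ecs-office.s-0005.s-msedge.net. 214 IN CNAME s-0005.s-msedge.net.",
    "s-0005.s-msedge.net. 214 IN A 52.113.194.132",
]

chain, addrs = follow_cnames(ANSWER, "ecs.office.com.")
print(len(chain) - 1, "CNAME hops ->", chain[-1], addrs)
# prints: 4 CNAME hops -> s-0005.s-msedge.net. ['52.113.194.132']
```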

Too many A records

android.googleapis.com has at least 16 A records:

# dig android.googleapis.com A

; <<>> DiG 9.18.28-1~deb12u2-Debian <<>> android.googleapis.com A

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 40939

;; flags: qr rd ra; QUERY: 1, ANSWER: 16, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;android.googleapis.com. IN A

;; ANSWER SECTION:

android.googleapis.com. 219 IN A 142.250.183.10

android.googleapis.com. 219 IN A 142.250.66.10

android.googleapis.com. 219 IN A 142.250.183.138

android.googleapis.com. 219 IN A 142.250.70.42

android.googleapis.com. 219 IN A 142.250.70.74

android.googleapis.com. 219 IN A 142.250.182.202

android.googleapis.com. 219 IN A 142.251.42.106

android.googleapis.com. 219 IN A 142.250.70.106

android.googleapis.com. 219 IN A 142.250.199.170

android.googleapis.com. 219 IN A 142.250.199.138

android.googleapis.com. 219 IN A 142.251.42.74

android.googleapis.com. 219 IN A 142.250.183.170

android.googleapis.com. 219 IN A 142.250.77.74

android.googleapis.com. 219 IN A 142.250.183.202

android.googleapis.com. 219 IN A 142.250.71.106

android.googleapis.com. 219 IN A 142.250.182.234

;; Query time: 324 msec

;; SERVER: 9.9.9.9#53(9.9.9.9) (UDP)

;; WHEN: Thu Jan 02 11:49:42 IST 2025

;; MSG SIZE rcvd: 307

For AAAA records, we observed only 4 records though:

# dig android.googleapis.com AAAA

; <<>> DiG 9.18.28-1~deb12u2-Debian <<>> android.googleapis.com AAAA

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 23104

;; flags: qr rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 1232

;; QUESTION SECTION:

;android.googleapis.com. IN AAAA

;; ANSWER SECTION:

android.googleapis.com. 203 IN AAAA 2404:6800:4009:81e::200a

android.googleapis.com. 203 IN AAAA 2404:6800:4009:81f::200a

android.googleapis.com. 203 IN AAAA 2404:6800:4009:820::200a

android.googleapis.com. 203 IN AAAA 2404:6800:4009:81d::200a

;; Query time: 284 msec

;; SERVER: 9.9.9.9#53(9.9.9.9) (UDP)

;; WHEN: Thu Jan 02 11:50:38 IST 2025

;; MSG SIZE rcvd: 163
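
The MSG SIZE rcvd values above can be reproduced with a little arithmetic on the DNS wire format. The sketch below is an estimate under stated assumptions: every answer's owner name is sent as a 2-byte compression pointer back to the question, and the response carries one empty EDNS OPT record. The function names are ours, for illustration only.

```python
def wire_name_length(name: str) -> int:
    """Length in bytes of an uncompressed domain name on the wire."""
    labels = [label for label in name.rstrip(".").split(".") if label]
    # One length byte per label, the label bytes, then the root byte.
    return sum(1 + len(label) for label in labels) + 1


def estimated_response_size(qname: str, rdata_length: int, answer_count: int) -> int:
    """Estimate the size of a response holding answer_count same-type records."""
    header = 12
    question = wire_name_length(qname) + 4  # QTYPE + QCLASS
    # Assumed 2-byte owner pointer + TYPE, CLASS, TTL, RDLENGTH (10 bytes) + rdata.
    answer = 2 + 10 + rdata_length
    opt = 11  # assumed empty OPT record: root name + 10 fixed bytes
    return header + question + answer_count * answer + opt


print(estimated_response_size("android.googleapis.com", 4, 16))  # A: 307
print(estimated_response_size("android.googleapis.com", 16, 4))  # AAAA: 163
```

Both estimates match the sizes dig reported (307 and 163 bytes). Each additional A record costs 16 bytes, so even 16 of them stay comfortably below the classic 512-byte UDP limit.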

Short TTL of 5 seconds

AWS S3 seems to have started using TTLs as low as 5 seconds for some of its domains, such as:

- s3.ap-south-1.amazonaws.com

- s3-r-w.ap-south-1.amazonaws.com and

- s3-w.ap-south-1.amazonaws.com

The IPs seem to be rotating quite frequently as well:

# dig s3-r-w.ap-south-1.amazonaws.com

; <<>> DiG 9.18.28-1~deb12u2-Debian <<>> s3-r-w.ap-south-1.amazonaws.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 931

;; flags: qr rd ra; QUERY: 1, ANSWER: 7, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;s3-r-w.ap-south-1.amazonaws.com. IN A

;; ANSWER SECTION:

s3-r-w.ap-south-1.amazonaws.com. 5 IN A 52.219.158.194

s3-r-w.ap-south-1.amazonaws.com. 5 IN A 3.5.213.197

s3-r-w.ap-south-1.amazonaws.com. 5 IN A 3.5.213.172

s3-r-w.ap-south-1.amazonaws.com. 5 IN A 52.219.62.71

s3-r-w.ap-south-1.amazonaws.com. 5 IN A 3.5.211.148

s3-r-w.ap-south-1.amazonaws.com. 5 IN A 16.12.36.18

s3-r-w.ap-south-1.amazonaws.com. 5 IN A 52.219.160.198

;; Query time: 292 msec

;; SERVER: 9.9.9.9#53(9.9.9.9) (UDP)

;; WHEN: Thu Jan 02 11:52:33 IST 2025

;; MSG SIZE rcvd: 172

No AAAA/IPv6 records, though.

This behavior can be observed across AWS regional endpoints.
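
To get a feel for what a 5-second TTL means for a caching resolver, here is a back-of-the-envelope sketch in Python. It assumes a name that is queried continuously and a resolver that honors the TTL exactly, so it gives an upper bound rather than a measurement. The function name upstream_lookups is hypothetical.

```python
import math


def upstream_lookups(ttl_seconds: int, window_seconds: int = 86400) -> int:
    """Upper bound on cache refreshes for one name within the window."""
    if ttl_seconds <= 0:
        raise ValueError("ttl_seconds must be positive")
    return math.ceil(window_seconds / ttl_seconds)


# TTL of 5 seconds, as seen on the S3 endpoints above.
print(upstream_lookups(5))      # 17280 refreshes per day
# TTL of 10800 seconds, as served for hopbox.net in the trace earlier.
print(upstream_lookups(10800))  # 8 refreshes per day
```

Under these assumptions, a single busy S3 hostname can cost a resolver over two thousand times more upstream lookups per day than a record with a 3-hour TTL.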

The aaaaaaaaaaaaaaaaa domains in prod

The following are valid subdomains used in production by hotstar.com, an OTT provider in India owned by Disney:

- abh2p32aaaaaaaamaaaaaaaaaaaaa.live-ssai-cf-mum-ace.cdn.hotstar.com

- aba5q2faaaaaaaamaaaaaaaaaaaaa.hses7-vod-cf-ace.cdn.hotstar.com

Name server domain diversity

After the he.net domain was put on hold, a problem with that single domain brought down a large part of their infrastructure. Spreading name servers across diverse domains helps guard against this.

Amazon seems to have the most domain diversity in its name servers.

For example, see S3 name servers:

# dig ns s3.amazonaws.com

; <<>> DiG 9.18.28-1~deb12u2-Debian <<>> ns s3.amazonaws.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 4181

;; flags: qr rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;s3.amazonaws.com. IN NS

;; ANSWER SECTION:

s3.amazonaws.com. 12769 IN NS ns-1300.awsdns-34.org.

s3.amazonaws.com. 12769 IN NS ns-1579.awsdns-05.co.uk.

s3.amazonaws.com. 12769 IN NS ns-63.awsdns-07.com.

s3.amazonaws.com. 12769 IN NS ns-771.awsdns-32.net.

;; Query time: 284 msec

;; SERVER: 9.9.9.9#53(9.9.9.9) (UDP)

;; WHEN: Thu Jan 02 13:42:57 IST 2025

;; MSG SIZE rcvd: 181

Let's expand on this:

- TLD diversity: .com, .org and .net (gTLDs) and .co.uk (under the .uk ccTLD) are used (side note: whole TLDs can go down too, see .bd example and .ru outage)

- second- and third-level domain diversity
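
The two points above can be checked mechanically for any NS set. The following Python sketch groups name server hostnames by public suffix and by registrable domain. It relies on a tiny hand-written set of multi-label suffixes (just co.uk) as a stand-in for the real public suffix list, which is an assumption made to keep the example self-contained. The function names are ours.

```python
# Assumption: a tiny hand-written stand-in for the public suffix list.
MULTI_LABEL_SUFFIXES = {"co.uk"}


def split_name_server(host: str) -> tuple[str, str]:
    """Return (public suffix, registrable domain) for a name server host."""
    labels = host.rstrip(".").lower().split(".")
    suffix_len = 2 if ".".join(labels[-2:]) in MULTI_LABEL_SUFFIXES else 1
    suffix = ".".join(labels[-suffix_len:])
    registrable = ".".join(labels[-(suffix_len + 1):])
    return suffix, registrable


def diversity(name_servers: list[str]) -> dict[str, list[str]]:
    """Summarize the distinct suffixes and registrable domains of an NS set."""
    parts = [split_name_server(host) for host in name_servers]
    return {
        "suffixes": sorted({suffix for suffix, _ in parts}),
        "domains": sorted({domain for _, domain in parts}),
    }


# NS records for s3.amazonaws.com from the query above.
S3_NAME_SERVERS = [
    "ns-1300.awsdns-34.org.",
    "ns-1579.awsdns-05.co.uk.",
    "ns-63.awsdns-07.com.",
    "ns-771.awsdns-32.net.",
]
# NS records for hopbox.net from the +trace output earlier.
HOPBOX_NAME_SERVERS = [
    "ns-146-a.gandi.net.",
    "ns-17-b.gandi.net.",
    "ns-241-c.gandi.net.",
]

print(diversity(S3_NAME_SERVERS))
print(diversity(HOPBOX_NAME_SERVERS))
```

For S3 this reports four suffixes and four registrable domains, so no single domain or TLD problem takes out every name server. For hopbox.net it reports one of each (net and gandi.net).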

As we keep stumbling on more observations about the domain name system, we'll keep posting more DNS chronicles.

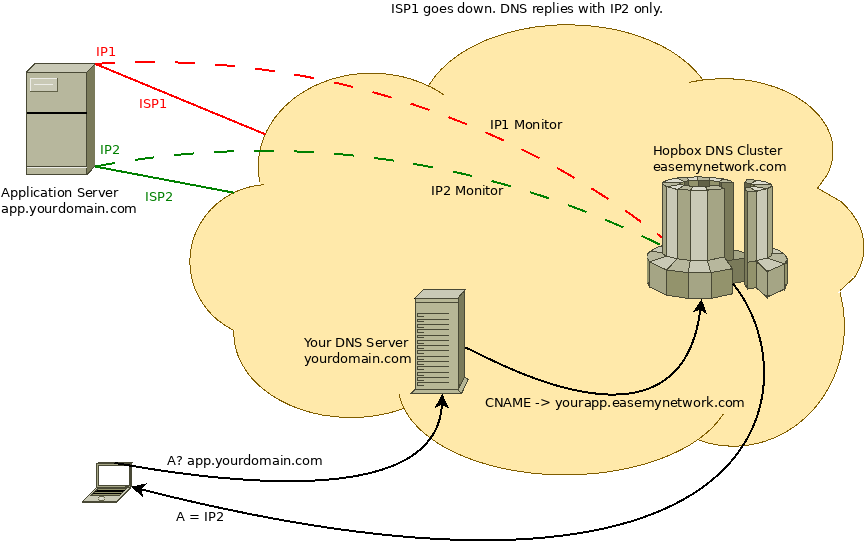

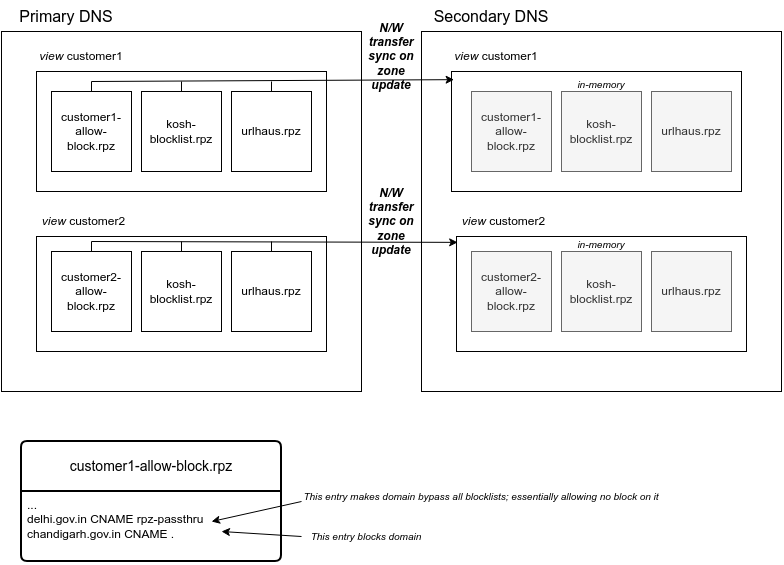

At Hopbox, we're working to make your networks autonomous. Need help managing your growing network or DNS? Contact us!