Custom DNS-based blocking for enterprise networks using BIND 9

The Domain Name System (DNS) can be an effective tool for blocking access to certain domains in an enterprise setting. It can also help prevent intentional or accidental access to known malicious domains.

At Hopbox, we wanted to incorporate customer-specific DNS-based blocking while also protecting against ransomware, malware, and other newly discovered threats for 900+ locations across customer networks.

Resolving a name actually takes several round trips through the DNS server hierarchy. A recursive resolver walks the hierarchy from the root DNS servers to the TLD authoritative servers to the domain's authoritative servers to obtain the answer.

We settled on BIND 9 as our recursive resolver: it is tried and tested, developed by ISC, and used by many of the Internet's root DNS servers.

Response Policy Zones

Initially, we tried serving a redirect, but that isn't possible without breaking HTTPS unless end clients trust our custom TLS certificates, so we chose to serve NXDOMAIN instead. To serve NXDOMAIN for the intended queries, we used Response Policy Zones (RPZ) to collate block lists and allow lists for BIND 9. Most block list providers now publish their lists in RPZ format, which we feed directly into BIND.
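As a minimal sketch of how a policy zone can be attached to the resolver in named.conf: the zone name `rpz.blocklist` and the file path below are hypothetical placeholders, not our production values.

```
options {
    recursion yes;
    // Rewrite responses according to the records in this policy zone.
    response-policy { zone "rpz.blocklist"; };
};

// The policy zone itself is declared like any other locally served zone.
zone "rpz.blocklist" {
    type master;
    file "/etc/bind/db.rpz.blocklist";   // hypothetical path
};
```

Listing several zones inside `response-policy` is also possible; BIND consults them in the order they are listed.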

Sample RPZ file:

    $TTL 3600
    @   IN  SOA localhost. root.localhost. (
                1   ; serial
                1h  ; refresh
                1h  ; retry
                1w  ; expiry
                1h ) ; minimum
        IN  NS  localhost.
    ; rpz-passthru exempts the name from rewriting (an allow-list entry)
    example.org  IN  CNAME  rpz-passthru.
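The sample above contains only a pass-through (allow) record. For the blocking side, RPZ encodes the NXDOMAIN action as a CNAME pointing at the root (`.`). A short sketch, using placeholder names under the reserved `.example` TLD rather than any real block list:

```
; Hypothetical block-list entries
malware.example      IN  CNAME  .   ; answer NXDOMAIN for this name
*.malware.example    IN  CNAME  .   ; and for every name beneath it
```

The owner names are written without a trailing dot, so they are relative to the policy zone's origin, as in the sample file.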

Views

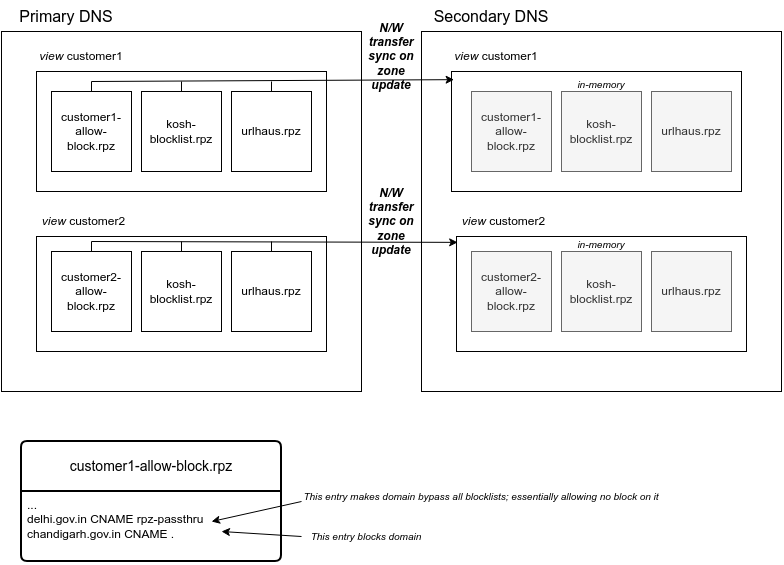

We also wanted customer-specific allow and block lists. In DNS terms, we wanted to serve a custom zone to each customer. BIND's Views feature matched this requirement: a View let us serve custom zones based on the client's source IP (the customer's networks). We created one View per customer, each with its own allow and block lists.
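A per-customer View might look roughly like the sketch below. The ACL name, address range (a documentation prefix), zone names, and file paths are all hypothetical and only illustrate the shape of the config.

```
// Hypothetical customer network; replace with the customer's real source ranges.
acl "customer-a-nets" { 203.0.113.0/24; };

view "customer-a" {
    // Queries whose source IP matches this ACL are answered from this view.
    match-clients { "customer-a-nets"; };
    recursion yes;

    // Policy zones are checked in order, so the allow list comes first.
    response-policy {
        zone "allow.customer-a.rpz";
        zone "block.customer-a.rpz";
    };

    zone "allow.customer-a.rpz" {
        type master;
        file "/etc/bind/customer-a/db.allow.rpz";
    };
    zone "block.customer-a.rpz" {
        type master;
        file "/etc/bind/customer-a/db.block.rpz";
    };
};
```

Two things to keep in mind with this layout: views are evaluated in the order they appear in named.conf and the first match wins, and once any view is defined, every zone has to be declared inside a view.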

Secondary DNS setup

For redundancy, we set up multiple secondary servers that sync zone files from the primary. The primary server sends a notification (NOTIFY) whenever its zones are updated, and the secondaries then pull the changes via zone transfer. A secondary DNS server automatically reloads the updated zone after receiving a full zone transfer.

On the primary, the following was added to the BIND 9 config:

    allow-transfer { SECONDARY_SERVER_IP; };
    also-notify { SECONDARY_SERVER_IP; };

For each zone definition, the following was added:

    type master;
    notify yes;
    allow-transfer { SECONDARY_SERVER_IP; };

On the secondary, the following was added to each zone definition in the BIND 9 config:

    type slave;
    masters { PRIMARY_SERVER_IP; };
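Put together, a matching pair of zone stanzas could look like the following sketch. The zone name and file paths are hypothetical; `SECONDARY_SERVER_IP` and `PRIMARY_SERVER_IP` are the same placeholders used above.

```
// On the primary
zone "block.customer-a.rpz" {
    type master;
    file "/etc/bind/customer-a/db.block.rpz";
    notify yes;
    allow-transfer { SECONDARY_SERVER_IP; };
    also-notify { SECONDARY_SERVER_IP; };
};

// On the secondary
zone "block.customer-a.rpz" {
    type slave;
    masters { PRIMARY_SERVER_IP; };
    // Local copy of the zone, written after each transfer.
    file "/var/cache/bind/db.block.customer-a.rpz";
};
```

Remember to increase the SOA serial whenever a zone file changes, since secondaries use it to decide whether a transfer is needed. Recent BIND 9 releases also accept `primary`, `secondary`, and `primaries` as synonyms for the older keywords.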

Deployment

Our OpenWrt-based Hopbox gateway devices run dnsmasq, which is configured to use our recursive resolver as its upstream DNS server, so all DNS queries are routed to our resolver. If a user looks up a blocked domain, the resolver serves NXDOMAIN for the query, effectively blocking access. dnsmasq also caches responses on the Hopbox gateway, which speeds up repeated lookups.

We use Ansible to push changes to Hopbox. The DNS service currently covers 650+ customer locations, serving 240k requests/hour and blocking 4.5k requests/hour on the network.

At Hopbox, we're working to make your networks autonomous. Need help managing your growing network or DNS? Contact us!